Did gyre and gimble in the wabe:

All mimsy were the borogoves,

And the mome raths outgrabe.

— Lewis Carroll, “Jabberwocky”

From its publication in 1871 in “Through the Looking-Glass,” Lewis Carroll’s sequel to “Alice’s Adventures in Wonderland,” “Jabberwocky” has intrigued adults even more than the children for whom Carroll wrote it, and with good reason: His wordplay returns us to the days of our own youth, when we delighted in learning new words and the relations between them, because, in doing so, we came to see the world through different eyes. A child, on the other hand, who is reading “Through the Looking-Glass” for the first time, is just as likely to marvel at the rest of Carroll’s play with words which, for us adults, have long since been robbed of the sense of newness and pregnant meaning that they held for us as kids.

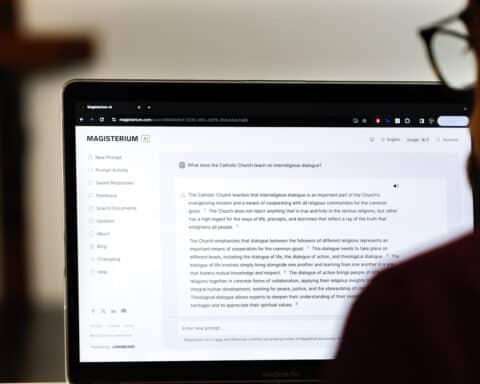

In my last column, discussing ChatGPT and generative artificial intelligence in general, I mentioned Owen Barfield’s insight that the unexpected juxtaposition of two or more words tickles our fancy and allows us to uncover some bit of reality that we haven’t noticed before.

Carroll, of course, was writing some three decades before Barfield was even born, but he seems to have anticipated Barfield’s point. What makes “Jabberwocky” so fascinating is our sense that we can understand what the poem is saying, even though nearly all of the nouns and adjectives, and even a significant number of the verbs, were pure inventions of Carroll’s. (As if to prove that our sense is right, at least two of the verbs — chortled and galumphing — entered the language shortly after Carroll first wrote them and remain in common use 150 years later.)

Understanding that ChatGPT operates on the premise that there is a next logical word to place after the current sequence of words, I suspected that it would choke when I asked it to “Write a poem in the style of Lewis Carroll’s ‘Jabberwocky.’” What I didn’t expect to see was “’Twas brillig,” followed by every word of the first stanza, in the original order. But, of course, that makes a certain sense: While GPT-3 has been trained to recognize patterns across billions of words, “Jabberwocky” breaks that model. There’s only one “Jabberwocky,” so ChatGPT doesn’t know how to write another poem in its style. The best it can do is recreate the original.

While others worry that ChatGPT and its AI cousins may be coalescing into an incipient Skynet, pointing out that ChatGPT cannot write a “nonsense poem” may seem like the weakest of all possible criticisms. But the real danger of generative AI isn’t that it will learn to think for itself; it’s that we, in our desire to replace the God that modern man no longer believes in, will invest AI models with a depth of intelligence and understanding that they will never be capable of achieving. Already, we see computer scientists speaking in hushed and reverent tones of being unable to understand how the models they built and fed actually work. But there’s nothing mysterious about what ChatGPT is doing: It analyzes what came before in order to spit out more of the same.

In that sense, generative AI is a caricature of the worst kind of conservatism: one unable to generate new ideas and new understandings to apply timeless truths to new social, political and economic challenges.

No wonder that the noted linguist Noam Chomsky, for many decades a man firmly of the left, wrote disparagingly of generative AI recently in the pages of The New York Times. “The human mind,” Chomsky noted, “is not, like ChatGPT and its ilk, a lumbering statistical engine for pattern matching, gorging on hundreds of terabytes of data and extrapolating the most likely conversational response or most probable answer to a scientific question. On the contrary, the human mind is a surprisingly efficient and even elegant system that operates with small amounts of information; it seeks not to infer brute correlations among data points but to create explanations.”

As usual, even when he is mostly right, Chomsky is just a bit off. The human mind is not a system but the manifestation of a soul made in the image and likeness of the divine Word, and as such, it seeks not to “create explanations” but to understand the Truth that has spoken it into being. That is something that ChatGPT will never be able to do, nor will it be able to understand why it cannot. But we can.

Scott P. Richert is publisher for OSV.